Abstract: The S3C2410 is a Samsung microprocessor based on the ARM920T core. Together with the IIS audio bus and a UDA1341 CODEC, it forms an embedded audio system capable of audio playback and capture. This article describes the relevant hardware circuit and the key design points of the audio driver under Linux.

Key words: S3C2410; audio system; Linux; driver; microprocessor; UDA1341

1 Introduction

In recent years, embedded digital audio products have been favored by more and more consumers. In consumer electronic products such as MP3 players and mobile phones, users' expectations for these personal terminals are no longer limited to simple calls and simple word processing; high-quality sound is an important development trend. The design of an embedded audio system is divided into hardware and software. The hardware part uses an audio system architecture based on the IIS bus. On the software side, embedded Linux is an open and free operating system that supports a variety of hardware architectures. Its kernel runs efficiently and stably, its source code is open, and it comes with mature development tools, providing developers with an excellent development environment.

In this paper, the Samsung S3C2410 microprocessor and the Philips UDA1341 stereo audio CODEC are used to construct an embedded audio system. The design of the related hardware circuit is given, and the driver implementation for the audio system, based on the Linux 2.4 kernel, is introduced.

2 Introduction to ARM920T and S3C2410

The ARM920T is an ARM microprocessor core from the ARM9 family that uses a 5-stage pipeline and provides the Thumb extension, EmbeddedICE debug technology and a Harvard bus architecture. On the same production process, its performance can be more than twice that of the ARM7TDMI. The S3C2410 is a microprocessor built around the ARM920T core and manufactured by Samsung on a 0.18 μm process. It has separate 16 KB instruction and 16 KB data caches and an MMU, which allows developers to port Linux directly to a target system based on this processor.

3 Hardware framework implementation based on the IIS bus

The IIS (Inter-IC Sound) bus is a serial digital audio bus protocol proposed by Philips. It is a multimedia-oriented audio bus dedicated to data transfer between audio devices, providing a serial digital stereo link to standard CODECs. The IIS bus carries only sound data; other signals, such as control signals, must be transmitted separately. To minimize the number of pins, IIS uses only three lines: a time-multiplexed serial data line, a word select line, and a clock line.
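To make the relationship between the three lines concrete, the following is a minimal arithmetic sketch rather than anything taken from the original driver. It assumes a stereo stream in which each channel slot is exactly as wide as one sample; the function names are hypothetical.

```c
#include <stdint.h>

/* Serial bit clock for a two-channel (stereo) stream: each sample frame
 * carries one left slot and one right slot, each slot_bits wide.
 * Assumption: the slot width equals the sample width. */
static uint32_t iis_bit_clock_hz(uint32_t sample_rate_hz, uint32_t slot_bits)
{
    return sample_rate_hz * slot_bits * 2u;
}

/* The word select line marks left versus right, so it completes one
 * full cycle per sample frame and runs at the sampling rate. */
static uint32_t iis_word_select_hz(uint32_t sample_rate_hz)
{
    return sample_rate_hz;
}
```

For 16-bit stereo audio at 44.1 kHz this gives a bit clock of 1,411,200 Hz, that is, 32 bit clocks per sample frame.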

The hardware part of the audio system consists mainly of the connection between the CPU and the CODEC. This system uses the Philips UDA1341 audio CODEC on the IIS audio bus. The CODEC supports the IIS bus data format and uses bitstream conversion technology for signal processing, with a programmable gain amplifier (PGA) and a digital automatic gain controller (AGC).

The S3C2410 has a built-in IIS bus interface that can be connected directly to an 8/16-bit stereo CODEC. It also supports DMA transfers to and from its FIFOs as an alternative to interrupt mode, allowing data to be sent and received simultaneously. The IIS interface has three working modes that can be selected by setting the IISCON register. The hardware framework described in this article uses the transmit-and-receive mode. In this mode, the IIS data lines receive and transmit audio data simultaneously through two DMA channels, and DMA service requests are raised automatically by the FIFO ready flag. The S3C2410 has a 4-channel DMA controller located between the system bus (AHB) and the peripheral bus (APB). Table 1 lists the channel request sources for the S3C2410.

To achieve full-duplex transmission of audio data, DMA channels 1 and 2 of the S3C2410 are both required: channel 1 is selected for received data and channel 2 for transmitted data. The DMA controller of the S3C2410 has no built-in DMA storage area, so the program must allocate a DMA buffer for the audio device in memory; the data to be played back or recorded is then moved to or from this buffer directly by DMA.

As shown in Figure 1, the IIS bus signals of the S3C2410 are connected directly to the IIS signals of the UDA1341. The L3 interface pins L3MODE, L3CLOCK, and L3DATA are connected to the GPB1, GPB2, and GPB3 general-purpose output pins of the S3C2410, respectively. The UDA1341 provides two sets of audio signal inputs, each comprising a left and a right channel.

As shown in Figure 2, the two sets of audio inputs are processed quite differently inside the UDA1341. The first set of input signals passes through a 0 dB/6 dB switch, is sampled, and is sent to the digital mixer. The second set passes through the programmable gain amplifier (PGA) and is then sampled, and the sampled data is sent to the digital mixer through the digital automatic gain controller (AGC). The second set of inputs was chosen for this hardware design because the input volume should be adjustable in software, which the second set allows by controlling the AGC through the L3 bus interface. In addition, the second set can use the PGA to amplify the signal from the microphone (MIC) on-chip.

Since the IIS bus carries only audio data, the UDA1341 also has an L3 bus interface for transmitting control signals. The L3 interface acts as the mixer control interface and can control the bass and volume of the input and output audio signals. It is connected to three general-purpose I/O pins of the S3C2410, which are driven by software to emulate the complete timing and protocol of the L3 bus. Note that the L3 clock is not a continuous clock: it issues 8 clock cycles only when there is data on the data line, and at all other times the clock line stays high.
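The GPIO emulation described above can be sketched as follows. This is an illustration under stated assumptions, not the original driver code: the `l3_pins` structure is a hypothetical stand-in for the three port B pins and also simulates a receiver, the byte is assumed to be sent least significant bit first with data latched on the rising clock edge, L3MODE is assumed to be held low for an address byte, and all timing delays are omitted.

```c
#include <stdint.h>

/* Hypothetical stand-in for the three GPIO lines; a real driver would
 * write the S3C2410 port B data register instead of these fields. */
struct l3_pins {
    int mode;          /* L3MODE level */
    int clock;         /* L3CLOCK level, idles high */
    int data;          /* L3DATA level */
    uint8_t captured;  /* byte assembled by the simulated receiver */
    int nbits;         /* rising clock edges seen in the current byte */
};

static void l3_init(struct l3_pins *p)
{
    p->mode = 1;
    p->clock = 1;      /* the clock line rests high when idle */
    p->data = 0;
    p->captured = 0;
    p->nbits = 0;
}

/* Drive L3CLOCK; the simulated receiver latches L3DATA on a rising edge. */
static void l3_set_clock(struct l3_pins *p, int level)
{
    if (!p->clock && level && p->nbits < 8) {
        p->captured |= (uint8_t)(p->data << p->nbits);  /* LSB first (assumed) */
        p->nbits++;
    }
    p->clock = level;
}

/* Send one byte as exactly eight clock pulses.
 * address_mode != 0 holds L3MODE low (assumed to mark an address byte). */
static void l3_send_byte(struct l3_pins *p, uint8_t byte, int address_mode)
{
    int i;

    p->captured = 0;
    p->nbits = 0;
    p->mode = address_mode ? 0 : 1;
    for (i = 0; i < 8; i++) {
        l3_set_clock(p, 0);          /* clock low: change the data line */
        p->data = (byte >> i) & 1;
        l3_set_clock(p, 1);          /* clock high: receiver samples */
    }
    p->mode = 1;                     /* release L3MODE; clock is left high */
}
```

After `l3_send_byte()` returns, the clock line is high again, which matches the non-continuous clock behavior: eight pulses per byte and an idle-high line otherwise.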

4 Audio driver implementation under Linux

The device driver is the interface between the operating system kernel and the machine hardware, hiding the hardware details from the application. The device driver is part of the kernel and mainly performs the following functions: device initialization and release; device management, including real-time parameter setting and providing an operation interface to the device; reading the data that the application writes to the device file and returning the data that the application requests; and detecting and handling device errors.

The audio device driver mainly realizes the transmission of the audio stream by controlling the hardware, and provides a standard audio interface to the upper layer. The audio interface driver designed by the author provides two standard interfaces. The digital sound processing interface (DSP) is responsible for the transmission of audio data, i.e., playing digital sound files and recording. The mixer interface (MIXER) is responsible for mixing the output audio, such as volume adjustment and treble and bass control. These two standard interfaces correspond to the device files /dev/dsp and /dev/mixer, respectively.

The implementation of the audio driver is divided into five parts: initialization, opening the device, the DSP driver, the MIXER driver, and releasing the device.

4.1 Initializing and opening the device

Device initialization mainly sets up the UDA1341 volume, sampling frequency, L3 interface, and so on, and registers the device. The function audio_init(void) performs the following tasks:

Initialization of the S3C2410 control port (GPB1-GPB3);

Allocating a DMA channel to the device;

Initialization of the UDA1341;

Register the audio device and the mixer device.

Opening the device is done by the open() function, which performs the following tasks:

Set up IIS and L3 bus;

Prepare parameters such as channel, sample width, etc. and notify the device;

Calculate the buffer size based on the sampling parameters;

Allocate a DMA buffer of the appropriate size for use by the device.
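The buffer-size step in the list above can be illustrated with a small calculation. This is a sketch under assumptions, not the original driver's formula: the function names, the target duration in milliseconds, the 256-byte lower bound, and the rounding up to a power of two are all choices made here for illustration.

```c
#include <stdint.h>

/* Data rate of an uncompressed PCM stream in bytes per second. */
static uint32_t audio_bytes_per_second(uint32_t rate_hz, uint32_t channels,
                                       uint32_t bits_per_sample)
{
    return rate_hz * channels * (bits_per_sample / 8u);
}

/* Smallest power-of-two size (assumed minimum 256 bytes) that holds at
 * least `ms` milliseconds of audio at the given data rate. */
static uint32_t audio_fragment_bytes(uint32_t bytes_per_sec, uint32_t ms)
{
    /* Round up so that the buffer never holds less than `ms` of audio. */
    uint32_t need = (uint32_t)(((uint64_t)bytes_per_sec * ms + 999u) / 1000u);
    uint32_t size = 256u;

    while (size < need)
        size <<= 1;
    return size;
}
```

For 16-bit stereo at 44.1 kHz the data rate is 176,400 bytes per second, so a 20 ms target needs 3,528 bytes and rounds up to a 4,096-byte buffer.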

4.2 DSP driver implementation

The DSP driver realizes the transmission of audio data, that is, the data transfer for playback and recording. It also provides an ioctl to control the DAC and ADC sampling rates in the UDA1341. The sampling rate is controlled simply by reading and writing the sampling rate control register in the UDA1341, so the main part of the driver is the control of audio data transfer.

The driver describes the state of the entire audio system through the static structure audio_state, whose most important members are the two data stream structures audio_in and audio_out. These describe the input and output audio streams, respectively, and recording and playback are carried out by operating on audio_in and audio_out. The main work in this driver is the design and implementation of the data stream structure, which should contain information about the audio buffer, information about the DMA, the semaphore used, and the address of the FIFO entry register.

To improve the throughput of the system, DMA is used to move the sound data to be played back or recorded directly to or from a DMA buffer in kernel memory. Since the DMA controller of the S3C2410 has no built-in DMA storage area, the driver must allocate a DMA buffer in memory for the audio device. Choosing the buffer configuration sensibly is critical. Take the write() function as an example. Because the volume of audio data is usually large, a buffer that is too small easily overflows, so a larger buffer is needed. But filling one large buffer forces the CPU to process a large amount of data at a time, and the long processing time tends to introduce delay. The author therefore uses a multi-buffer mechanism that divides the buffer into multiple data segments, whose number and size are specified in the data stream structure. The large buffer is thus split into several small segments, and as soon as one small segment has been processed it can be sent out through DMA. The same applies to the read function: the DMA can handle a small amount of data each time, without waiting for the whole large buffer to fill. An ioctl interface is also provided to the upper layer, so that it can adjust the size and number of the buffer segments according to the precision of the audio data, that is, the data rate, to obtain the best transfer behavior.
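The segmented buffer described above can be sketched in user-space C as follows. This is a simplified model, not the original driver: the names `frag_ring`, `ring_write` and `ring_dma_done` are hypothetical, the segment count and size are fixed and tiny for illustration, and where a real write() would sleep on a semaphore until a segment is free, this sketch simply returns a short count.

```c
#include <stddef.h>
#include <string.h>

#define NFRAGS     4   /* assumed number of segments */
#define FRAG_BYTES 8   /* tiny segment size, for illustration only */

struct frag_ring {
    unsigned char data[NFRAGS][FRAG_BYTES];
    size_t fill;       /* bytes in the segment currently being filled */
    unsigned head;     /* segment being filled by write() */
    unsigned tail;     /* oldest segment queued for DMA */
    unsigned ready;    /* full segments waiting for DMA */
};

/* Copy data in segment by segment; each completed segment is queued for
 * DMA at once. Returns the bytes accepted, which is less than len when
 * every segment is full. */
static size_t ring_write(struct frag_ring *r, const unsigned char *src,
                         size_t len)
{
    size_t done = 0;

    while (done < len && r->ready < NFRAGS) {
        size_t room = FRAG_BYTES - r->fill;
        size_t n = (len - done < room) ? len - done : room;

        memcpy(&r->data[r->head][r->fill], src + done, n);
        r->fill += n;
        done += n;
        if (r->fill == FRAG_BYTES) {        /* segment complete */
            r->head = (r->head + 1) % NFRAGS;
            r->fill = 0;
            r->ready++;
        }
    }
    return done;
}

/* Simulated DMA-complete event: frees the oldest queued segment.
 * Returns 1 if a segment was released, 0 if none was queued. */
static int ring_dma_done(struct frag_ring *r)
{
    if (r->ready == 0)
        return 0;
    r->tail = (r->tail + 1) % NFRAGS;
    r->ready--;
    return 1;
}
```

Because a segment becomes available to DMA as soon as it is full, the transfer of early data can overlap with the filling of later segments, which is the latency benefit the paragraph above describes.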

4.3 MIXER driver implementation

The MIXER driver only controls the mixing effect and performs no read or write operations, so the MIXER file operation structure implements only an ioctl call, through which the upper layer sets the mixing parameters of the CODEC. The driver is built around a structure, struct UDA1341_codec, which describes the basic information of the CODEC and mainly provides the functions for reading and writing the CODEC registers and for mixer control. The ioctl in the MIXER file operation structure is implemented by calling the mixer control function in UDA1341_codec.
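As an illustration of what a mixer control function might do with a volume request, the sketch below converts a stereo volume value into a single attenuation code. It is not the original driver code. The packing of left and right volume into the low two bytes, each in the range 0 to 100, follows the OSS mixer convention; the 6-bit code with 0 as loudest and 63 as quietest, and the averaging of the two channels into one setting, are assumptions made here.

```c
#include <stdint.h>

/* OSS-style stereo volume: left in bits 0-7, right in bits 8-15,
 * each nominally 0..100. */
static unsigned oss_left(int v)  { return (unsigned)v & 0xffu; }
static unsigned oss_right(int v) { return ((unsigned)v >> 8) & 0xffu; }

/* Map a stereo volume to one 6-bit attenuation code.
 * Assumed layout: 0 = loudest, 63 = quietest. The two channels are
 * averaged because a single shared volume setting is assumed. */
static uint8_t volume_to_attenuation(int oss_value)
{
    unsigned pct = (oss_left(oss_value) + oss_right(oss_value)) / 2u;

    if (pct > 100u)
        pct = 100u;               /* clamp out-of-range requests */
    return (uint8_t)(63u - (pct * 63u) / 100u);
}
```

The resulting code would then be written to the CODEC over the L3 interface by the register write function of the codec structure.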

4.4 Unloading the device

The device is unloaded by the unregistration function close(). This function unregisters the device using the device number obtained during registration and releases the system resources used by the driver, such as the DMA channels and buffers.

5 Conclusion

This article describes the construction of an IIS-based audio system in an embedded system to achieve audio playback and recording. The CODEC hardware connection based on the Samsung S3C2410 microprocessor and the implementation of the audio driver under embedded Linux are described in detail. The system has been implemented on an S3C2410-based development platform, where it plays and captures audio smoothly with good results.

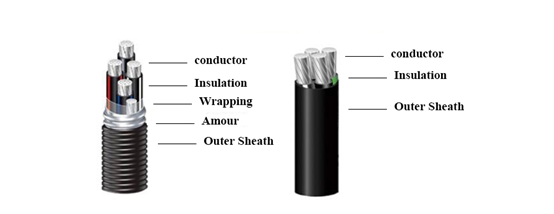
