Before cars can drive themselves, many legal, social and infrastructure obstacles need to be cleared. Although almost all of us have seen self-driving cars in science fiction or movies, it is another matter to trust that machines or on-board computers can navigate among us under all conditions. In addition, the question of liability in the event of a collision will need to be carefully worked out, because a human driver may no longer be involved (today, the human is usually the party at fault). Ideally, every car on the road would drive itself. Unfortunately, that may take several generations of car development to become a reality. Finally, and quite importantly, communication between cars and their environment (car to infrastructure, as well as car to car) requires substantial investment to install and maintain the infrastructure and to standardize how communication is done, so that everyone speaks the same "language".

For now, though, we are concerned with the technical feasibility of autonomous vehicles. Today, we are accustomed to safety systems such as anti-lock braking systems (ABS) and airbags, as well as electric power steering and electronic engine management. These systems let the car take action (braking, steering, accelerating), but what triggers the action? Although Advanced Driver Assistance Systems (ADAS) are not yet available in all cars, they will play a vital role in the evolution from driving a car to being driven by one, because they serve as the eyes of the car.

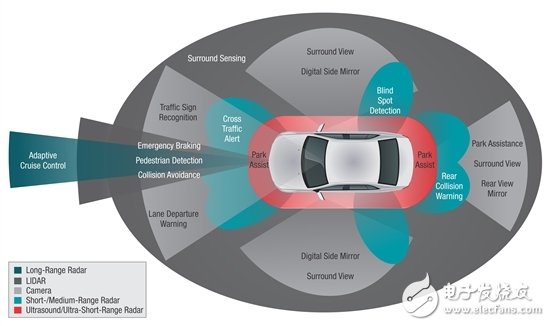

ADAS depends on a wide variety of new sensors, especially machine vision sensors such as camera systems, radar and LIDAR. These sensors give the car the situational awareness it needs, but interpreting the large volume of new data also strains the system's processing power. For example, the system must acquire and process each pixel of the camera image (low-level processing), detect and identify objects of interest in the picture (intermediate processing), and then make decisions based on those objects and their position and movement relative to the car (high-level processing). Each of these stages calls for a different kind of processing. Conventional microprocessor and digital signal processing architectures excel at one of these tasks but often have limitations for the others. For machine vision, all of this processing must be performed in real time, which requires heterogeneous processing capabilities such as those of the TI TDA2x System-on-Chip (SoC) product family.
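The three processing levels described above can be sketched in a few lines of Python. This is only a toy illustration, not how the TDA2x or any production ADAS stack works: the function names (`low_level`, `mid_level`, `high_level`), the brightness threshold, the lane columns and the "near" rule are all assumptions made up for this example. It does show why the stages differ in character: the first touches every pixel uniformly, the second follows irregular object shapes, and the third is plain decision logic.

```python
from collections import deque

def low_level(frame, threshold=128):
    """Low-level: per-pixel work. Turn a grayscale frame into a binary mask."""
    return [[1 if px >= threshold else 0 for px in row] for row in frame]

def mid_level(mask):
    """Intermediate: group adjacent foreground pixels (4-connectivity)
    into bounding boxes given as (top, left, bottom, right)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            queue = deque([(r, c)])
            seen[r][c] = True
            top, left, bottom, right = r, c, r, c
            while queue:
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes

def high_level(boxes, frame_height, lane=(2, 5), near_fraction=0.5):
    """High-level: decide on an action. Hypothetical rule: brake when an
    object overlaps the lane columns and reaches the lower part of the frame."""
    for top, left, bottom, right in boxes:
        in_lane = left <= lane[1] and right >= lane[0]
        is_near = bottom >= frame_height * near_fraction
        if in_lane and is_near:
            return "brake"
    return "cruise"

# A made-up 6x8 grayscale frame: one bright object at the edge, one in the lane.
FRAME = [
    [0, 0, 0,   0,   0, 0, 0, 200],
    [0, 0, 0,   0,   0, 0, 0, 200],
    [0, 0, 0,   0,   0, 0, 0,   0],
    [0, 0, 0, 220, 220, 0, 0,   0],
    [0, 0, 0, 220, 220, 0, 0,   0],
    [0, 0, 0,   0,   0, 0, 0,   0],
]

objects = mid_level(low_level(FRAME))
action = high_level(objects, frame_height=len(FRAME))
print(objects, action)
```

In a real system each stage would typically run on the kind of hardware suited to it, which is the motivation for heterogeneous processing given in the text.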

Once the car can see, understand what to do, and act accordingly, we have all the basic building blocks we need. For more on autonomous driving, machine vision and heterogeneous processing, read the white paper: Making cars safer through technological innovation.
